DFKI-NLP is a Natural Language Processing group of researchers, software engineers and students at the Berlin office of the German Research Center for Artificial Intelligence (DFKI). We’re working on basic and applied research in areas covering, among others, information extraction, knowledge base population, dialogue, sentiment analysis, and summarization, across various domains such as health, media, and science. We are particularly interested in core research on learning in low-resource settings, reasoning over larger contexts, and continual learning. We strive for a deeper understanding of human language and thinking, with the goal of developing novel methods for processing and generating human language text, speech, and knowledge. An important part of our work is the creation of corpora, the evaluation of NLP datasets and tasks, and explainability research.

Key topics:

- Applied / domain-specific NLP

- Evaluation methodology research

- Dataset construction, linguistic annotation, synthetic data generation

- Learning in low-resource settings and over large contexts

- Multilingual NLP

- Explainability

Our group forms a part of DFKI’s Speech and Language Technology department led by Prof. Sebastian Möller, and closely collaborates with e.g. the Quality and Usability chair of Technische Universität Berlin, DFKI’s Multilinguality and Language Technology department and the XPlaiNLP group of TU Berlin.

Latest News

Two papers from researchers in the TRAILS project have been accepted as Main and Findings papers at the 2025 Conference on Empirical Methods in Natural Language Processing (EMNLP 2025). EMNLP will take place November 4-9 in Suzhou, China. The first paper, titled “Multilingual Datasets for Custom Input Extraction and Explanation Requests Parsing in Conversational XAI Systems”, introduces two multilingual datasets in the context of Conversational XAI systems, one for intent recognition, and one for slot filling / input extraction. The second paper investigates political bias in LLMs through exchanging words in minimal sentence pairs with euphemisms or dysphemisms in German claims.

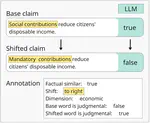

One paper by Qianli Wang and Nils Feldhus has been accepted to the Findings Track at the 63rd Annual Meeting of the Association for Computational Linguistics 2025 (ACL 2025). In the paper, they introduce ZeroCF, a faithful approach for leveraging important words derived from feature attribution methods to generate counterfactual examples in a zero-shot setting. Second, they present a new framework, FitCF, which further verifies aforementioned counterfactuals by label flip verification and then inserts them as demonstrations for few-shot prompting.

One paper originating from research in the TRAILS project has been accepted to the 10th Workshop on Representation Learning for NLP (RepL4NLP 2025), co-located with NAACL 2025. In the paper, we reimagine classical probing to evaluate knowledge transfer from simple source to more complex target tasks. Instead of probing frozen representations from a complex source task on diverse simple target probing tasks (as usually done in probing), we explore the effectiveness of embeddings from multiple simple source tasks on a single target task. Our findings reveal that task embeddings vary significantly in utility for coreference resolution, with semantic similarity tasks (e.g., paraphrase detection) proving most beneficial. Additionally, representations from intermediate layers of fine-tuned models often outperform those from final layers.

One paper from researchers in the DFKI-NLP group has been accepted as a Findings paper at the 2024 Conference on Empirical Methods in Natural Language Processing (EMNLP 2024). EMNLP will take place November 12-16 in Miami, Florida. The paper presents CoXQL, a dataset for user intent recognition for conversational XAI systems, covering 31 intents, seven of which require filling multiple slots. The paper also presents an improved parsing approach for intent recognition and slot filling on this dataset, which is evaluated using different LLMs.

Projects

Datasets

We introduce a German-language dataset comprising Frequently Asked Question-Answer pairs: raw FAQ drafts, their revisions by professional editors and LLM generated revisions. The data was used to investigate the use of large language models (LLMs) to enhance the editorial process of rewriting customer help pages. The corpus comprises 56 question-answer pairs addressing potential customer inquiries across various topics. For each FAQ pair, a raw input is provided by specialized departments, and a rewritten gold output is crafted by a professional editor of Deutsche Telekom. The final dataset also includes LLM generated FAQ-pairs. Please see our paper accepted at INLG 20204, Tokyo, Japan. You can find the Github repo containing the dataset here https://github.com/DFKI-NLP/faq-rewrites-llms.

MultiTACRED is a multilingual version of the large-scale TAC Relation Extraction Dataset. It covers 12 typologically diverse languages from 9 language families, and was created by machine-translating the instances of the original TACRED dataset and automatically projecting their entity annotations. For details of the original TACRED’s data collection and annotation process, see the Stanford paper. Translations are syntactically validated by checking the correctness of the XML tag markup. Any translations with an invalid tag structure, e.g. missing or invalid head or tail tag pairs, are discarded (on average, 2.3% of the instances). Languages covered are: Arabic, Chinese, Finnish, French, German, Hindi, Hungarian, Japanese, Polish, Russian, Spanish, Turkish. Intended use is supervised relation classification. Audience - researchers. The dataset will be released via the LDC (link will follow). Please see our ACL paper for full details. You can find the Github repo containing the translation and experiment code here https://github.com/DFKI-NLP/MultiTACRED.

Ex4CDS are explanations (or more precisely justifications) of physicians in the context of clinical decision support. In the course of a larger study, physicians estimated the probability of different clinical outcomes in nephology, namely rejection, graft loss and infections, within the next 90 days. Each estimation had to be justified within a short text - these are our explanations. The explanations were provided in German and have strong similarities to general clinical notes. You can find a description and the data here: https://github.com/DFKI-NLP/Ex4CDS

In this work, we present the first corpus for German Adverse Drug Reaction (ADR) detection in patient-generated content. The data consists of 4,169 binary annotated documents from a German patient forum, where users talk about health issues and get advice from medical doctors. As is common in social media data in this domain, the class labels of the corpus are very imbalanced. This and a high topic imbalance make it a very challenging dataset, since often, the same symptom can have several causes and is not always related to a medication intake. We aim to encourage further multi-lingual efforts in the domain of ADR detection. More info: https://aclanthology.org/2022.lrec-1.388/

This repository contains corpus called MobASA: a novel German-language corpus of tweets annotated with their relevance for public transportation, and with sentiment towards aspects related to barrier-free travel. We identified and labeled topics important for passengers limited in their mobility due to disability, age, or when travelling with young children. The data can be used for as a training or test corpus for aspect-oriented sentiment analysis. Moreover, the corpus can benefit building inclusive public transportation systems. You can find the corpus here: https://github.com/DFKI-NLP/sim3s-corpus, and the description of the corpus here: https://aclanthology.org/2022.csrnlp-1.5.pdf

This repository contains the DFKI MobIE Corpus (formerly “DAYSTREAM Corpus”), a dataset of 3,232 German-language documents collected between May 2015 - Apr 2019 that have been annotated with fine-grained geo-entities, such as location-street, location-stop and location-route, as well as standard named entity types (organization, date, number, etc). All location-related entities have been linked to either Open Street Map identifiers or database ids of Deutsche Bahn / Rhein-Main-Verkehrsverbund. The corpus has also been annotated with a set of 7 traffic-related n-ary relations and events, such as Accidents, Traffic jams, and Canceled Routes. It consists of Twitter messages, and traffic reports from e.g. radio stations, police and public transport providers. It allows for training and evaluating both named entity recognition algorithms that aim for fine-grained typing of geo-entities, entity linking of these entities, as well as n-ary relation extraction systems. You can find the description of the corpus here: https://www.dfki.de/web/forschung/projekte-publikationen/publikationen-uebersicht/publikation/11741/

Contact

-

Salzufer 15/16

10587 Berlin - Enter Salzufer 15/16 and take the elevator to Reception on Floor 4

- 9:00 to 17:00 Monday to Friday